"The intelligence of AI systems is being overhyped and, while we could get there eventually, we are currently nowhere near achieving artificial general intelligence (AGI)."

Those are the words of Gary Marcus, Professor Emeritus of Psychology and Neural Science at New York University, as he pours cold water on the 'AI Boom' that has almost single-handedly supported the entire stock market for the last month.

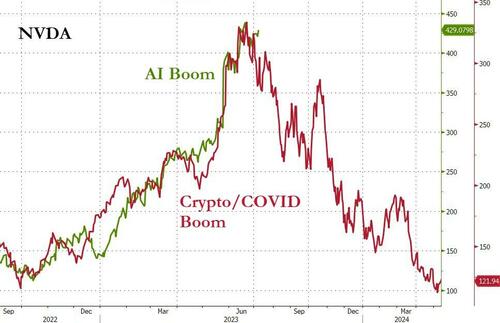

We have seen these hype cycles before...

Source: Bloomberg

In a conversation with Goldman Sachs' Jenny Grimberg, Marcus explains how today's generative artificial intelligence (AI) tools actually work.

At the core of all current generative AI tools is basically an autocomplete function that has been trained on a substantial portion of the internet.

These tools possess no understanding of the world, so they’ve been known to hallucinate, or make up false statements.

The tools excel at largely predictable tasks, such as writing code, but not at tasks like providing accurate medical information or diagnoses, which autocomplete isn't sophisticated enough to handle.

Contrary to what some may argue, the professor explains, these tools don't reason anything like humans do.

AI machines are learning, but much of what they learn is the statistics of words, and, with reinforcement learning, how to properly respond to certain prompts.

They’re not learning abstract concepts.

That’s why much of the content they produce is garbage and/or false.

Humans have an internal model of the world that allows them to understand each other and their environments.

AI systems have no such model and no curiosity about the world. They learn what words tend to follow other words in certain contexts, but human beings learn much more just in the course of interacting with each other and with the world around them.
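To make the "autocomplete" idea concrete, here is a toy sketch of a model that learns nothing but which words tend to follow other words. It is a simple bigram counter, vastly simpler than the neural networks behind tools like GPT-4, and the corpus and function names are hypothetical, chosen only for illustration.

```python
from collections import Counter, defaultdict


def train_bigrams(text: str) -> dict[str, Counter]:
    """Count, for each word, which words follow it in the training text."""
    words = text.lower().split()
    follows: dict[str, Counter] = defaultdict(Counter)
    for current, nxt in zip(words, words[1:]):
        follows[current][nxt] += 1
    return follows


def autocomplete(follows: dict[str, Counter], prompt: str, length: int = 5) -> str:
    """Greedily append the most frequent next word; no notion of meaning is involved."""
    words = prompt.lower().split()
    for _ in range(length):
        candidates = follows.get(words[-1])  # .get avoids adding unseen words to the defaultdict
        if not candidates:
            break  # the model has never seen this word followed by anything
        words.append(candidates.most_common(1)[0][0])
    return " ".join(words)


# Hypothetical toy "training data"
corpus = "the cat sat on the mat . the cat ate the fish . the dog sat on the rug ."
model = train_bigrams(corpus)
print(autocomplete(model, "the dog", 4))  # prints "the dog sat on the cat"
```

The output is fluent, yet the sentence never appears in the training text: each word is simply the most frequent follower of the one before it. In miniature, that is the gap Marcus describes between the statistics of words and an actual model of the world.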

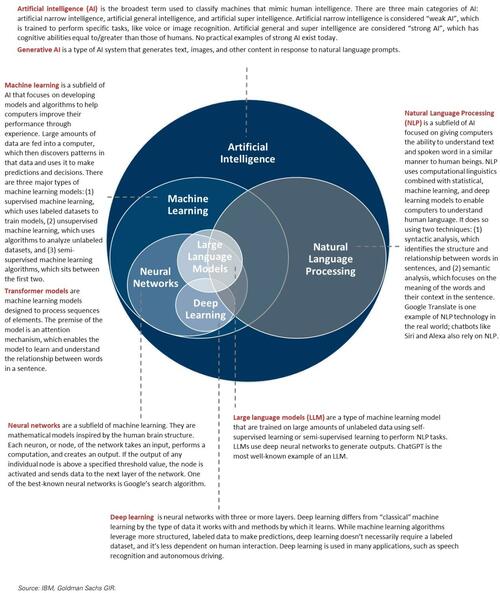

Artificial intelligence (AI) is the science of creating intelligent machines. AI is a broad concept that encompasses several different subfields, including machine learning, natural language processing, neural networks, and deep learning.

So, is the hype around generative AI overblown?

Marcus responds like any good academic: "Yes and no."

Generative AI tools are no doubt materially impacting our lives right now, both positively and negatively.

They’re generating some quality content, but also misinformation, which, for example, could have significant adverse consequences for the 2024 US presidential election.

But the intelligence of AI systems is being overhyped.

A few weeks ago, it was claimed that OpenAI’s GPT-4 large language model (LLM) passed the undergraduate exams in engineering and computer science at MIT, which stirred up a lot of excitement. But it turned out that the methodology was flawed; in fact, my long-time collaborator Ernie Davis had pointed out problems with that methodology around a year earlier, yet people still proceeded to use it.

We are nowhere near achieving artificial general intelligence (AGI). Those who believe AGI is imminent are almost certainly wrong.

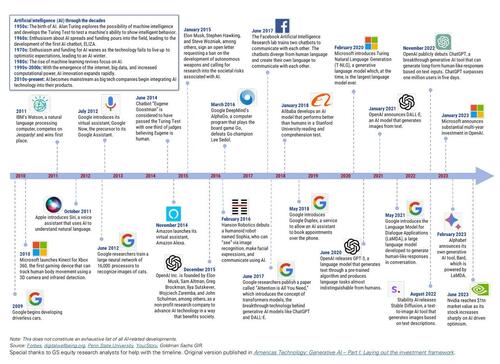

Here's how we got here...

Finally, Marcus explains that his biggest concern is that we’re giving an enormous amount of power and authority to the small number of companies that currently control AI systems, and in subtle ways that we may not even be aware of.

The data on which LLMs are trained can bias the models' output, which is disquieting given that these systems are starting to shape our beliefs. Another concern is the truthfulness of AI systems: as mentioned, they’ve been known to hallucinate.

Bad actors can use these systems for deliberate abuse, from spreading harmful medical misinformation to disrupting elections, which could gravely threaten society.

Be wary of the hype, Marcus concludes: AI is not yet as magical as many people think.

I wouldn’t go so far as to say that it’s too early to invest in AI; some investments in companies with smart founding teams that have a good understanding of product-market fit will likely succeed. But there will be a lot of losers. So investors need to do their homework and perform careful due diligence on any potential investment. It’s easy for a company to claim that it’s an AI company, but does it have a moat around it? Does it have a technical or data advantage that makes it likely to succeed? Those are important questions for investors to be asking.