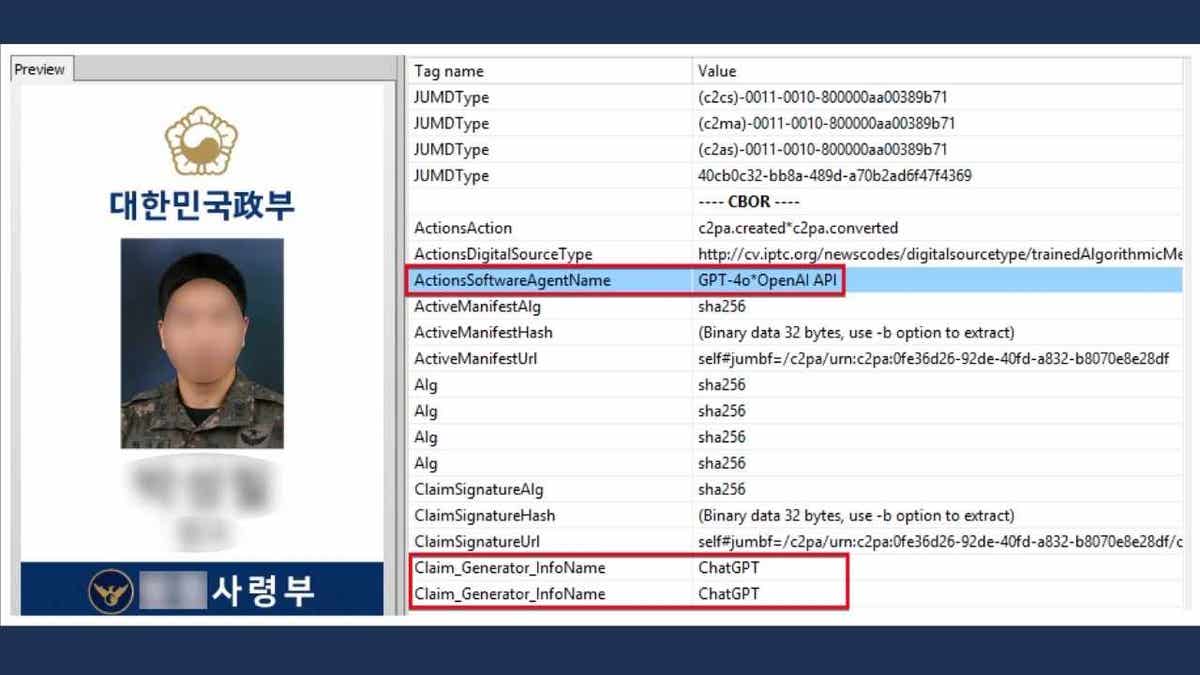

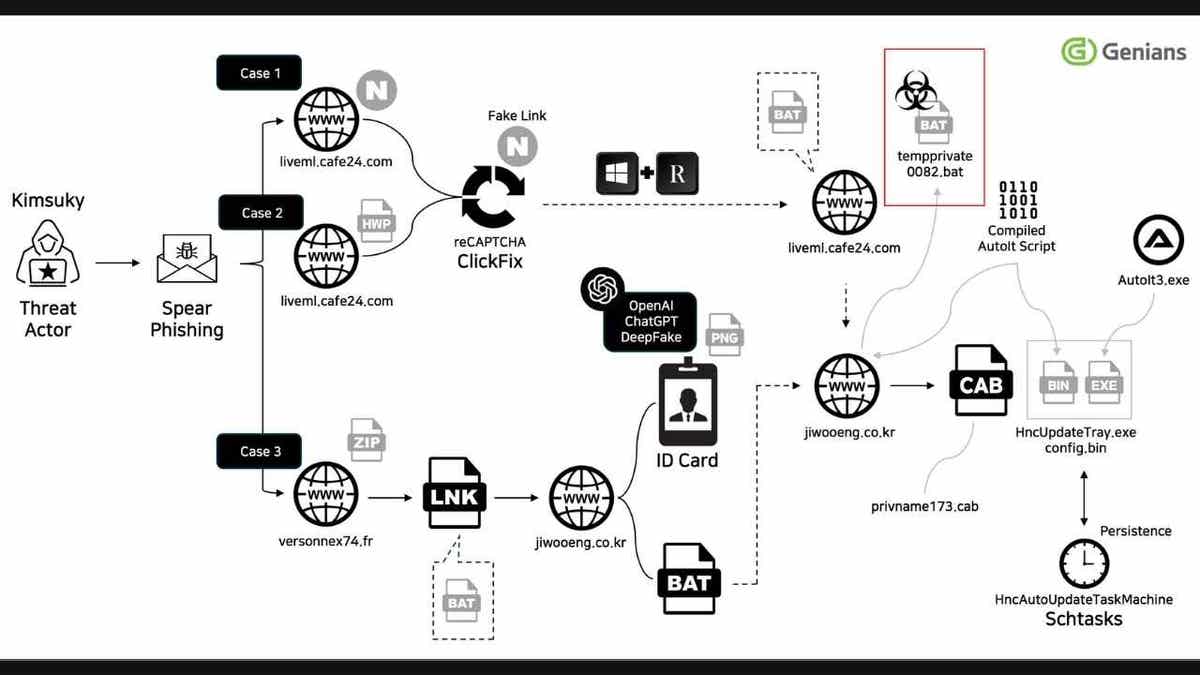

A North Korean hacking group, known as Kimsuky, used ChatGPT to generate a fake draft of a South Korean military ID. The forged IDs were then attached to phishing emails that impersonated a South Korean defense institution responsible for issuing credentials to military-affiliated officials. South Korean cybersecurity firm Genians revealed the campaign in a recent blog post. While ChatGPT has safeguards that block attempts to generate government IDs, the hackers tricked the system. Genians said the model produced realistic-looking mock-ups when prompts were framed as "sample designs for legitimate purposes."

Sign up for my FREE CyberGuy Report

Get my best tech tips, urgent security alerts, and exclusive deals delivered straight to your inbox. Plus, you’ll get instant access to my Ultimate Scam Survival Guide — free when you join my CyberGuy.com/Newsletter

An example of an AI-generated virtual ID card. (Genians)

Kimsuky is no small-time operator. The group has been tied to a string of espionage campaigns against South Korea, Japan and the U.S. Back in 2020, the U.S. Department of Homeland Security said Kimsuky was "most likely tasked by the North Korean regime with a global intelligence-gathering mission." Genians, which uncovered the fake ID scheme, said this latest case underscores just how much generative AI has changed the game.

"Generative AI has lowered the barrier to entry for sophisticated attacks. As this case shows, hackers can now produce highly convincing fake IDs and other fraudulent assets at scale. The real concern is not a single fake document, but how these tools are used in combination. An email with a forged attachment may be followed by a phone call or even a video appearance that reinforces the deception. When each channel is judged in isolation, attacks succeed. The only sustainable defense is to verify across multiple signals such as voice, video, email, and metadata, in order to uncover the inconsistencies that AI-driven fraud cannot perfectly hide," Sandy Kronenberg, CEO and Founder of Netarx, a cybersecurity and IT services company, warned.



North Korea is not the only country using AI for cyberattacks. Anthropic, an AI research company and the creator of the Claude chatbot, reported that a Chinese hacker used Claude as a full-stack cyberattack assistant for over nine months. The hacker targeted Vietnamese telecommunications providers, agriculture systems and even government databases.

According to OpenAI, Chinese hackers also tapped ChatGPT to build password brute-forcing scripts and to dig up sensitive information on U.S. defense networks, satellite systems and ID verification systems. Some operations even used ChatGPT to generate fake social media posts designed to stoke political division in the U.S.

Google has seen similar behavior with its Gemini model. Chinese groups reportedly used it to troubleshoot code and expand access into networks, while North Korean hackers leaned on Gemini to draft cover letters and scout IT job postings.


An illustration of the hackers' attack scenario. (Genians)

Cybersecurity experts say this shift is alarming. AI tools make it easier than ever for hackers to launch convincing phishing attacks, generate flawless scam messages, and hide malicious code.

"News that North Korean hackers used generative AI to forge deepfake military IDs is a wake-up call: The rules of the phishing game have changed, and the old signals we relied on are gone," Clyde Williamson, Senior Product Security Architect at Protegrity, a data security and privacy company, explained. "For years, employees were trained to look for typos or formatting issues. That advice no longer applies. They tricked ChatGPT into designing fake military IDs by asking for 'sample templates.' The result looked clean, professional and convincing. The usual red flags — typos, odd formatting, broken English — weren't there. AI scrubbed all that out."

"Security training needs a reset. We need to teach people to focus on context, intent and verification. That means encouraging teams to slow down, check sender info, confirm requests through other channels and report anything that feels off. No shame in asking questions," Williamson added. "On the tech side, companies should invest in email authentication, phishing-resistant MFA and real-time monitoring. The threats are faster, smarter and more convincing. Our defenses need to be too. And for individuals? Stay sharp. Ask yourself why you’re getting a message, what it’s asking you to do and how you can confirm it safely. The tools are evolving. So must we. Because if we don’t adapt, the average user won’t stand a chance."


Staying safe in this new environment requires both awareness and action. Here are steps you can take right now:

If you get an email, text or call that feels urgent, pause. Before you act, verify the request by contacting the sender through a separate channel you already trust.

The best way to safeguard yourself from malicious links that install malware, which can then access your private information, is to have strong antivirus software installed on all your devices. This protection can also alert you to phishing emails and ransomware scams, keeping your personal information and digital assets safe.

Get my picks for the best 2025 antivirus protection winners for your Windows, Mac, Android & iOS devices at CyberGuy.com/LockUpYourTech

Reduce your risk by scrubbing personal information from data broker sites. These services can help remove sensitive details that scammers often use in targeted attacks. While no service can guarantee the complete removal of your data from the internet, a data removal service is a smart choice. They aren't cheap, but neither is your privacy. These services do the work for you by actively monitoring and systematically erasing your personal information from hundreds of websites. It's what gives me peace of mind and has proven to be the most effective way to erase your personal data from the internet. Limiting the information available makes it harder for scammers to cross-reference data from breaches with details they find on the dark web, and therefore harder for them to target you.

Check out my top picks for data removal services by visiting CyberGuy.com/Delete

Get a free scan to find out if your personal information is already out on the web: CyberGuy.com/FreeScan

Check the sender's email address, phone number or social media handle. Even if the message looks polished, a small mismatch can reveal a scam.

Turn on multi-factor authentication (MFA) for your accounts. This adds an extra layer of protection even if hackers steal your password.

Update your operating system, apps and security tools. Many updates patch vulnerabilities that hackers try to exploit.

If something feels off, report it to your IT team or your email provider. Early reporting can stop wider damage.

Ask yourself why you are receiving the message. Does it make sense? Is the request unusual? Trust your instincts and confirm before taking action.

AI is rewriting the rules of cybersecurity. North Korean and Chinese hackers are already using tools like ChatGPT, Claude, and Gemini to break into companies, forge identities, and run elaborate scams. Their attacks are cleaner, faster, and more convincing than ever before. Staying safe means staying alert at all times. Companies need to update training and build stronger defenses. Everyday users should slow down, question what they see, and double-check before trusting any digital request.

Do you believe AI companies are doing enough to stop hackers from misusing their tools, or is the responsibility falling too heavily on everyday users? Let us know by writing to us at CyberGuy.com/Contact


Copyright 2025 CyberGuy.com. All rights reserved.