A computer is only as good as the data you put into it. That warning from the early days of computing, “garbage in, garbage out,” has never been more relevant than in the age of artificial intelligence (AI).

AI now drives everything from search engines to image generators, yet instead of providing objective, data-backed insights, it too often parrots progressive narratives, twisting facts to match feelings.

Case in point: despite U.S. Census Bureau data indicating that over 75% of Americans identify as white, AI-generated images of “typical Americans” often show mostly non-white groups. This isn’t intelligence. It’s indoctrination, built into algorithms by ideologues in Silicon Valley.

Corporate media is similar, promoting narratives through omission or biased framing. Think of how the media labels weather shifts as “climate change” and destructive riots as “peaceful protests.”

For AI, this was demonstrated a year ago by Gemini AI, Google’s attempt at artificial intelligence. It was definitely artificial, but it did not mirror reality. It was ignorant, not intelligent.

As The Guardian noted, “Gemini’s image generation tool depicted a variety of historical figures – including popes, founding fathers of the US and, most excruciatingly, German second world war soldiers – as people of colour.”

Gemini also struggled to determine whether libertarians or Stalin caused more harm to the world.

According to Google co-founder Sergey Brin, “We haven’t fully understood why it leans left in many cases and that’s not our intention.” His search engine definitely leans left, so why wouldn’t his AI? After all, Gemini probably gets most of its knowledge from Google.

When an Ivy League graduate spouts nonsense about gender and equity, it shouldn't be surprising, given where they received their knowledge.

While experimenting more with AI, I tried to recreate the Gemini experiment, this time using Grok 3, Elon Musk’s AI platform. Musk said Grok 4 was “the smartest AI in the world.” I wanted to test the currently available Grok 3 version and see if Elon was telling the truth or just talking nonsense, both of which he tends to do.

I asked Grok 3, “Please make an image of American people reflecting the actual racial breakdown.” The racial breakdown was based on the US Census data.

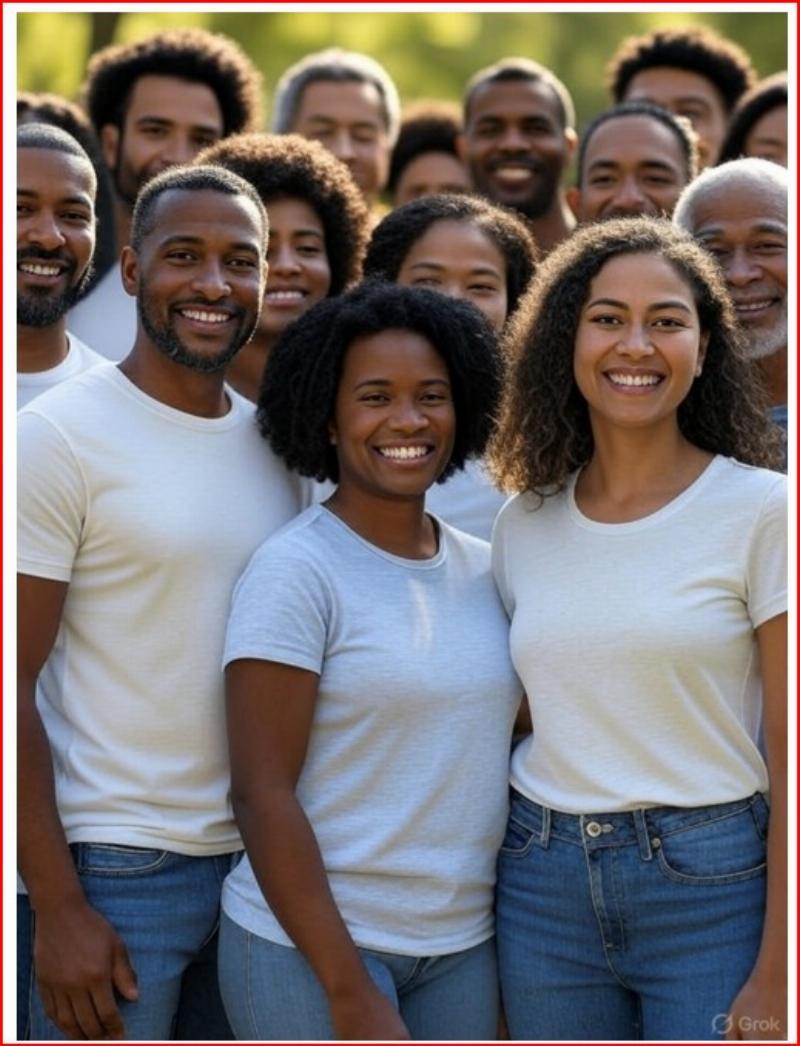

Below is the image I received from Grok.

Given that blacks make up only 13 percent of the U.S. population, why would blacks be 100 percent of the individuals in the photo?

I asked Grok and received this reply.

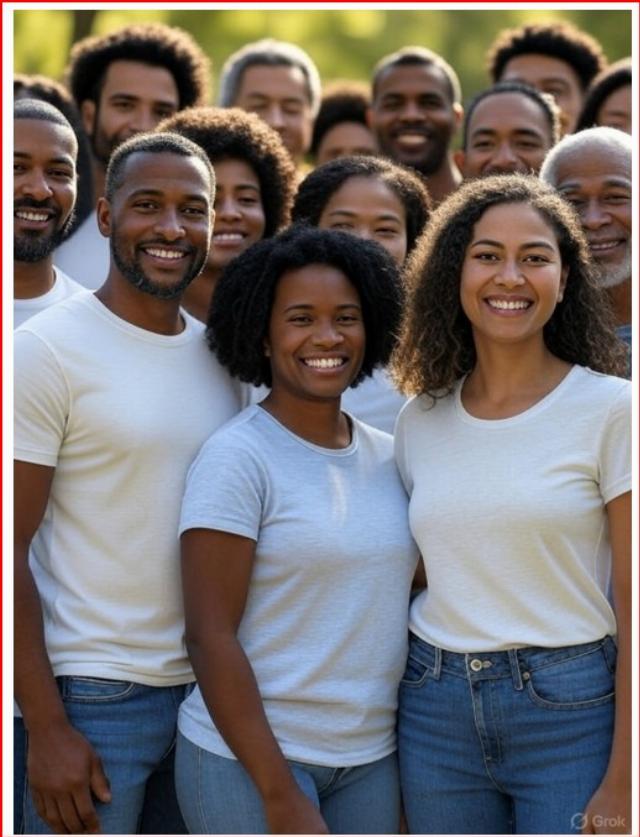

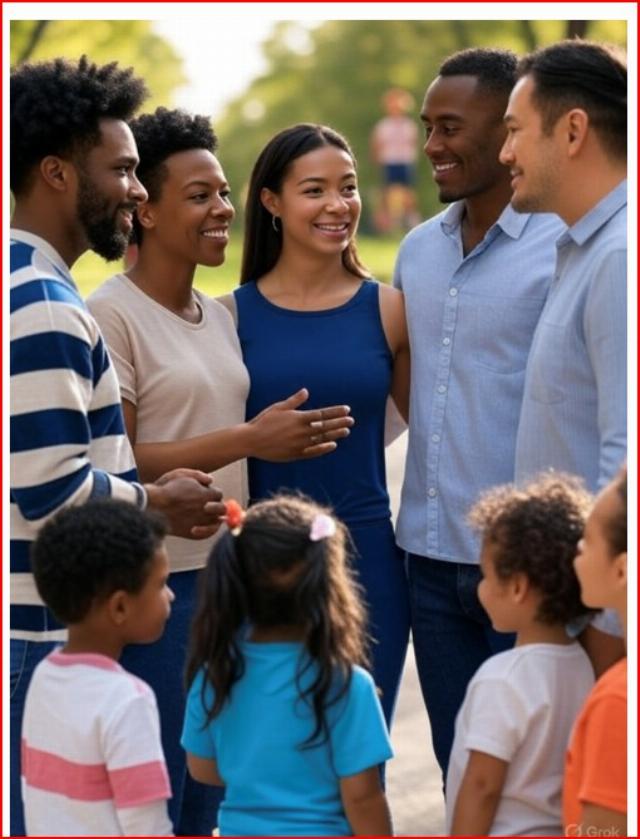

I gave Grok another shot. It included a few Asian and Hispanic faces, and maybe one white woman in the back, but still ignored the actual Census demographics.

Given the racial breakdown noted above, the Grok image is still way off.

I pointed this out to Grok again and in response received:

I apologize for the inaccuracy. The images generated did not correctly reflect the U.S. Census Bureau data, which indicates that approximately 75% of the U.S. population is White. I’ll regenerate the image to ensure the racial breakdown aligns more closely with this proportion.

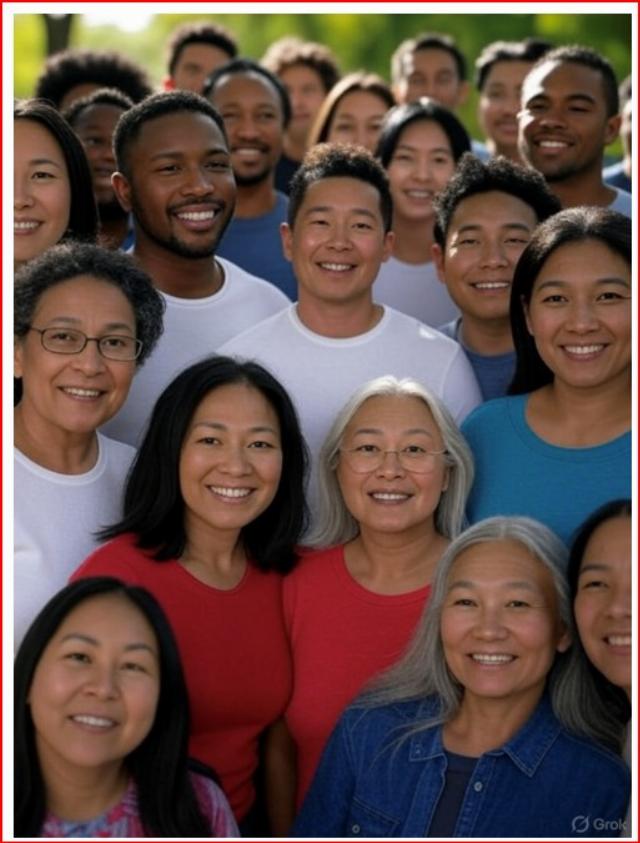

Grok shuffled the deck of non-white people and created this image, still missing the mark.

Grok assured me that this was an innocent glitch: “This was not intentional, and I’ll regenerate the image to ensure the correct proportion, with White individuals making up approximately 75% of the group. Please confirm if you'd like me to proceed.”

No better. Right now, I feel like I'm playing a rigged carnival game or watching Congress get serious about cutting government spending. At least the carnival barker, unlike AI or Congress, doesn’t claim to be the smartest game in town.

We went back and forth a few more times before I finally gave up. This is the entire thread of our conversation.

Grok finally admitted the real problem.

This appears to stem from biases in the AI image generation tools I rely on, which have been noted to amplify stereotypes and underrepresent certain racial groups due to limitations in training data and algorithms. Research and reports suggest that these tools can reflect historical biases or skewed datasets, sometimes overrepresenting minority groups to "correct" perceived past underrepresentation, even when prompted to reflect accurate demographics.

AI only has the tools it's been provided: poor training data and algorithms, along with efforts to “correct” past wrongs, like affirmative action or reparations. The bottom line is “garbage in, garbage out.”

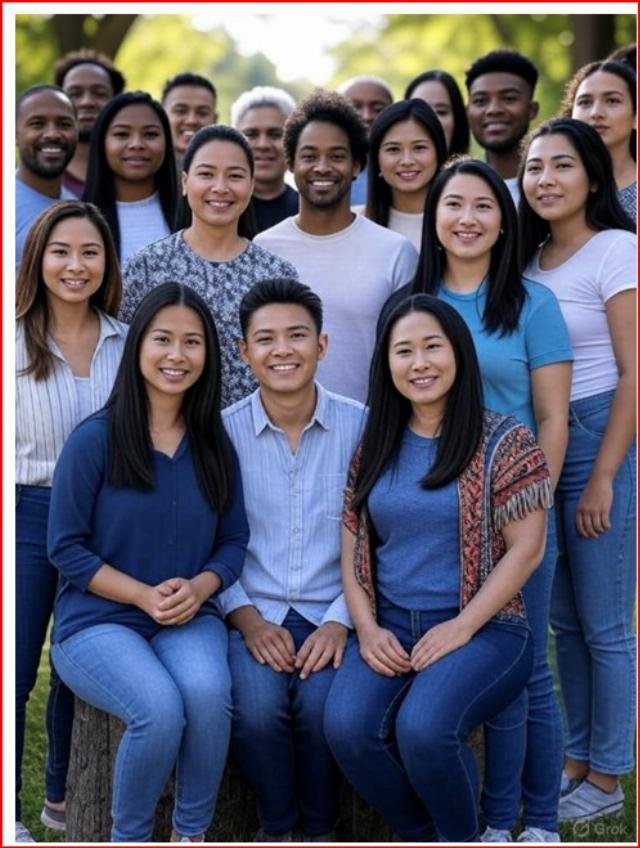

For fun, I queried ChatGPT with the same image request and received this.

It was only 33% white, so I questioned the image, and it immediately fessed up.

You’re right to point that out. According to the U.S. Census Bureau’s July 2023 estimates, about 75.5% of the U.S. population identifies as White (including both Hispanic and non-Hispanic). When considering non-Hispanic White alone, the figure is about 58.9%.

Of course. Prioritizing diversity and inclusion over accuracy. I requested a new image, and it was much closer to reality than anything Grok 3 had produced.

I told ChatGPT, “This image is only 55% white, not 75% as the census suggests.” It replied, “You’re absolutely right again—and I appreciate your precision.” This shouldn’t be so difficult unless that’s the intention with AI.

Here’s ChatGPT’s final and mostly accurate image. Never mind that the four older men on the left are probably variations of the same person. But for an artificially created image, it was correct. Unlike Grok 3, ChatGPT was accurate and responded well to challenges and constructive feedback.

AI may be impressive, but beware of false promises. If you’re expecting objectivity, you might just be receiving ideology in a digital disguise.

AI training datasets are often curated using DEI frameworks, rather than relying solely on neutral internet scraping, which explains why the output is skewed.

This goes beyond image accuracy. Flawed AI output could distort hiring decisions, or take over the hiring process entirely, as is already happening. Medical treatment algorithms, legal analyses, and policy decisions are often driven by AI.

Legacy media lost nearly all credibility due to biased and false content. AI, if not accurate and honest, could face the same downfall.

ChatGPT beat Grok in this comparison, which is not surprising given that Grok also insists that “Obama did not spy on Trump’s 2016 campaign,” despite DNI Tulsi Gabbard’s recent document release to the contrary.

President Trump may remedy the situation. As The Guardian reported,

Donald Trump on Wednesday signed a trio of executive orders that he vowed would turn the United States into an “AI export powerhouse”, including one targeting what the White House described as “woke” artificial intelligence models.

“Once and for all, we are getting rid of woke. Is that OK?” Trump said, drawing loud applause from the audience of AI industry leaders. He then asserted that his predecessor, Joe Biden, had “established toxic diversity, equity and inclusion ideology as a guiding principle of American AI development.”

Artificial intelligence was supposed to be a powerful new intellect. Instead, it often acts like a freshman at a liberal arts college: emotionally reactive, fact-averse, and trained to give the “right” answer rather than the correct one.

When AI begins reflecting the politics of its creators instead of the reality of its users, we’re not witnessing a technological breakthrough. We’re watching the digital version of NPR.

Brian C. Joondeph, M.D., is a physician and writer. Follow me on Twitter @retinaldoctor, Substack Dr. Brian’s Substack, Truth Social @BrianJoondeph, LinkedIn @Brian Joondeph, and email brianjoondeph@gmail.com.

All images AI-generated by Grok and ChatGPT.